Emotions are often portrayed in sci-fi as the last realm of humans, the only aspect of thought unavailable to most machines. Think of Data’s long quest for emotional experience on Star Trek, or the hard questions faced by Deckard in Do Androids Dream of Electric Sheep? (or Blade Runner, if you're more of a film person). For all their apparent unmarred rationality in stories, robots in real life can’t seem to escape from the emotional attachments and influences of humans. From military troops mourning their lost mechanical comrades to apps like Siri that depend on conversational interaction, humans tend to anthropomorphize AI, treating it as if it shares their feelings to some extent. But when asked directly if a computer can have feelings, many people would argue no, because of the inherent rationality assumed of mechanical systems. Is it possible? Could AI ever feel emotion?

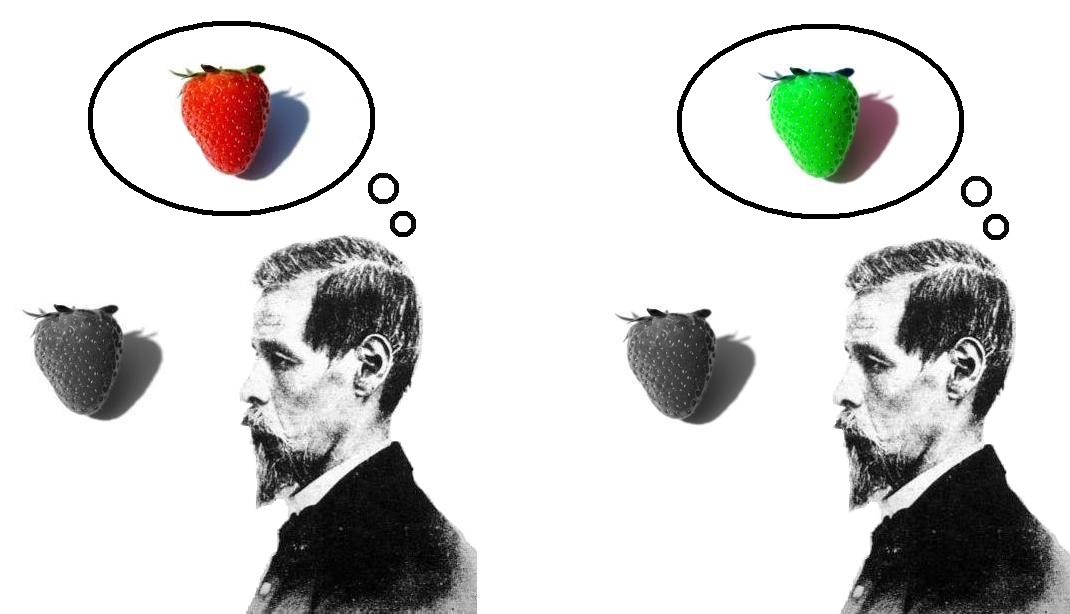

As humans, we define our emotions categorically - we feel happy, or sad, or angry, or so on. How is “happy,” as a category containing who knows how many subcategories of semantics - pleased, content, euphoric - defined? There are a few different ways you could approach a definition -

Emotion as a pre-determined response - The way I feel after I get something I want is “happy.”

Emotion as a physical state - Elevated serotonin and endocannabinoids are “happy.”

Emotion as derived from desire - Not needing or wanting to change anything about my current state is “happy.”

Woman? Salad? Definitely happy.

To most people these definitions probably seem roundabout and strange. Humans have a unique gift of language that allows happiness to encompass all of these things, with its typical definition boiling down to the abstract “feeling good.” But when exploring the concept of emotion in an artificial system, one without the convenient crutch of human consciousness and understanding, we have to look for more concrete rules.
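For the programmers reading, here is roughly what those three definitions could look like as concrete rules. This is only a toy sketch in Python: the `ToyAgent` class, its field names, and the 0.5 threshold are all made up for illustration, and none of it is meant as a claim about how a real AI system works.

```python
from dataclasses import dataclass, field


@dataclass
class ToyAgent:
    """A hypothetical agent, invented purely to illustrate the three definitions."""
    mood: str = "neutral"
    reward_signal: float = 0.0               # crude stand-in for "elevated serotonin"
    goals: set = field(default_factory=set)  # things the agent currently wants

    def on_event(self, event: str) -> None:
        # Definition 1: emotion as a pre-determined response.
        # Getting something the agent wanted sets the mood label directly.
        if event in self.goals:
            self.goals.discard(event)
            self.reward_signal += 1.0
            self.mood = "happy"

    def happy_by_state(self, threshold: float = 0.5) -> bool:
        # Definition 2: emotion as a physical state.
        # "Happy" means the internal signal is above an arbitrary threshold.
        return self.reward_signal > threshold

    def happy_by_desire(self) -> bool:
        # Definition 3: emotion as derived from desire.
        # "Happy" means there is nothing left the agent wants to change.
        return not self.goals


agent = ToyAgent(goals={"salad"})
agent.on_event("salad")
print(agent.mood, agent.happy_by_state(), agent.happy_by_desire())
```

Each method answers "is it happy?" in a different way, and nothing in the code requires the three answers to agree.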

By using any of these definitions I’ve listed above, I’d argue that yes, an artificially intelligent system could feel emotion. It’d be easy for an event to flip a switch in an AI’s programming, setting “mood = ‘happy.’” Bit-and-byte facsimiles of the chemical changes in the human brain that correlate with emotional response would be simple to measure and categorize. And for a system that monitors its goals constantly, the third definition of emotion would be quite useful - I’m reminded of THIS STUDY, in which humans with a damaged center of emotion in their brain were rendered incapable of simple decisions like picking a pen to write with, because there was no “rational” distinction between the choices. Emotion could be defined in this way as a wash of small influences on each of our desires and decisions based on current circumstances. AI can have emotions defined in these ways. But, as I’m sure many of you are shouting at your computers right now, that’s not the real question.

A better question is: Could AI ever experience emotion the same way that humans do?


This is also a much harder question because we have no reference point. We don’t know what turns a chemical highway into an emotional experience for humans, but we can at least see the same correlation between stimulus and response in dogs, mice, apes, babies, etc. One prime example is the tongue-extended expression that comes with liking a taste - replicated across different species as an instinctive reaction, which suggests that emotion is not a solely human experience. With AI, there’s no such connection. To assume any would be to anthropomorphize a machine to a dangerous extent. We can program the classification of emotion. We can program the physical qualities of emotion. We can program decisions and goals for an AI that rely on a self-perception of emotion.

But can we program emotion itself?

Or is it really necessary to?

One of the beautiful things that comes from consciousness is the shared experience. We all agree that the sky is blue, the arctic is cold, and the live action Avatar the Last Airbender movie would’ve been terrible, had it ever been created.

Really dodged a bullet there.

Through language we are able to connect the personal to the universal in this way. But it’s a flawed system. There’s no way to prove, for example, that the blue I see is the same blue you see. Or that the happy I feel is the same one you do. Each of these things is entirely subjective, impossible to measure, and impossible to share without the shaping force of language. If the true, pure essence of my “happy” was your experience of “sad,” who would ever know? We could only define happiness in a truly universal manner as a measurable response, physical, behavioral, or cognitive, to whatever experiences we had shared.

So it doesn’t matter whether the emotions an AI experiences are the same as ours, because we’d never know. It would only matter whether these objective, concrete definitions of emotion held true. These are the only things we can measure. Anything further is an argument about consciousness, humanity, and the ineffable, and their definitions, which have been debated for centuries. These concepts too will need concrete restrictions as AI becomes more and more prevalent in our human world. AI means a new era of philosophy in which questions are no longer enough. We can debate the existence of qualia or the Chinese Room experiment all day (and in a later post). But beyond philosophical misgivings, does it matter if an AI’s blue is the same as yours, if you can both tell me the color of the sky? In such abstract terms, an AI can experience emotion - but only as much as we’re willing to attribute to it.

Questions? Comments? Arguments? Please add below, I'd love to see what you have to say.