So, I decided to take matters into my own hands and try out the first problem mentioned by the book: Tic-Tac-Toe.

WARNING: THE FOLLOWING SECTION IS EDUCATIONAL, BUT ALSO REALLY LONG

SKIP TO THE PROGRAM PICTURES IF YOU JUST WANT TO HEAR ABOUT MY SOLUTION

The book uses Tic-Tac-Toe to introduce the concept of a heuristic search. A heuristic is a way of gauging whether or not a potential solution to a problem is a good one. For example, if you were trying to walk downtown in a foreign city, a good heuristic would be to take a path that goes toward the tall buildings. This might not always be a perfect method (some cities are more labyrinthine than others), but it will usually do a good job of finding a solution for your problem. Our thinking is completely tied up in heuristics - they're the rules you make up about how you run your life, whether it's how much money you save each month, or the lucky socks you wear to every test.

In the context of Artificial Intelligence, a heuristic search is one way a program can do its thinking.

Let's look at this in terms of Tic-Tac-Toe.

The program looks at a problem as a series of states. These are possible states of a Tic-Tac-Toe problem.

Pretty simple, right? You can perform an action on a state to change it to another state - like making a move on the board. Now, a solution to this particular type of problem is a progression of actions and states that ends in the program either getting a three-in-a-row or tying with the opposing player - a "winning" state.

In order to find a solution, the program takes a state, performs an action on it to create a new state, and checks if it's a winning state. If it is, it returns the action it took. Otherwise, it tries a different action.
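To make the state/action idea concrete, here is a minimal Ruby sketch. The board layout (a 9-element array) and the helper names `legal_moves`, `apply_move`, `winner?`, and `find_winning_move` are assumptions made up for illustration, not code from my actual program. For brevity it only treats three-in-a-row as "winning" and ignores ties.

```ruby
# A state is an array of 9 cells: "X", "O", or nil for empty.
# Cell indices (an assumed layout):
#   0 1 2
#   3 4 5
#   6 7 8
WIN_LINES = [
  [0, 1, 2], [3, 4, 5], [6, 7, 8],  # rows
  [0, 3, 6], [1, 4, 7], [2, 5, 8],  # columns
  [0, 4, 8], [2, 4, 6]              # diagonals
].freeze

# The actions available from a state are the indices of its empty cells.
def legal_moves(board)
  board.each_index.select { |i| board[i].nil? }
end

# Performing an action returns a NEW state; the original is left untouched.
def apply_move(board, index, player)
  new_board = board.dup
  new_board[index] = player
  new_board
end

# True if the player has three in a row on this board.
def winner?(board, player)
  WIN_LINES.any? { |line| line.all? { |i| board[i] == player } }
end

# Try each action in turn and return the first one that produces a
# winning state, or nil if no single move wins.
def find_winning_move(board, player)
  legal_moves(board).find do |move|
    winner?(apply_move(board, move, player), player)
  end
end

board = ["X", "X", nil,
         "O", "O", nil,
         nil, nil, nil]
p find_winning_move(board, "X")  # prints 2
```

On most boards no single move wins, so this returns `nil`, which is exactly why the program needs some way of ranking the non-winning states.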

The most basic kind of thinking is trial-and-error. You generate a random possible action to solve a problem, and if it doesn't work, discard it and try another. This WILL get you a good solution... eventually. For small problems, this might not be an issue. But for big problems with millions upon billions of possible solutions, there's no way.

This is where the heuristic part comes in. I find it useful to imagine an AI as standing on a map, starting on a base state, with paths to every other possible state representing every possible action it could take. When the AI tries an action, it moves along the path to the new state, which has paths connecting to all of ITS possible states, and so forth. And every state has an elevation - the higher up it is, the better it fits a heuristic (with the highest being winning states, of course). Your AI's goal then is to find the action from the state it's at that will get it to a higher state (one that better fits the heuristic). This means a lot of heuristic search techniques have funny names like Hill Climbing. The program's ultimate goal is to get to higher (or highest, when possible) ground.

For example, your Tic-Tac-Toe playing program (assuming the computer plays X) might decide that a good state is one that has the most short paths to a winning state for X, or, in other words, the most rows, columns, and diagonals with only Xs in them. From a base state, a program with this heuristic will make a move in the middle space as its action, because the state created is the "highest" state - it gives you 4 possible ways to win. It's the best possible action.
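Here is a small sketch of that heuristic in Ruby. The function name `open_lines_for` and the 9-cell array board are assumptions for illustration. It counts the rows, columns, and diagonals that contain at least one of the player's marks and none of the opponent's, then scores every possible opening move for X.

```ruby
# Cell indices (an assumed layout):
#   0 1 2
#   3 4 5
#   6 7 8
WIN_LINES = [
  [0, 1, 2], [3, 4, 5], [6, 7, 8],  # rows
  [0, 3, 6], [1, 4, 7], [2, 5, 8],  # columns
  [0, 4, 8], [2, 4, 6]              # diagonals
].freeze

# Count the lines that hold at least one of the player's marks and
# nothing belonging to the opponent.
def open_lines_for(board, player)
  WIN_LINES.count do |line|
    cells = line.map { |i| board[i] }
    cells.include?(player) && cells.all? { |c| c.nil? || c == player }
  end
end

# Score each of the nine possible first moves for X.
scores = (0..8).map do |i|
  board = Array.new(9)
  board[i] = "X"
  open_lines_for(board, "X")
end

p scores  # prints [3, 2, 3, 2, 4, 2, 3, 2, 3]
```

The middle cell (index 4) scores 4, the corners score 3, and the edges score 2, which matches the "4 possible ways to win" above.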

At this point it's important to note, however, that finding a best possible action isn't always possible - for problems where you can take a lot of different actions, it might take too much time. For problems where states can be far away from the winning state, you can get stuck on a 'ridge' or a 'plateau' where you've found the best possible state for your local area, but there's one much better many, many actions away. This is the reason heuristic search algorithms can get so complicated, and why so many different ones exist. AI's greatest achievement and its greatest challenge is dealing with situations where 'best' isn't possible.

However, Tic-Tac-Toe is a relatively simple problem - one small enough that it COULD be solved through trial and error; we just want it to be solved faster. For my programs, I decided to combine two different heuristic techniques - Steep Ascent Hill Climbing and Minimaxing.

Steep Ascent Hill Climbing (usually written as steepest-ascent hill climbing) is a heuristic search method where you evaluate all the possible states you could reach from your given state and pick the best one. Because of this, each step can take longer for your computer to complete than plain old vanilla hill climbing, which just takes the first state it finds that's better than the current one. But it will typically take fewer steps to get to a winning state, and, in the case of a game like Tic-Tac-Toe, a 'good' move really isn't good enough.
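The difference between the two is easy to see in code. This is a rough sketch, not my real program: the names `successors`, `simple_hill_climb`, and `steepest_ascent` are made up for the example, and it uses the "lines with only my marks" heuristic described earlier as the elevation.

```ruby
WIN_LINES = [
  [0, 1, 2], [3, 4, 5], [6, 7, 8],  # rows
  [0, 3, 6], [1, 4, 7], [2, 5, 8],  # columns
  [0, 4, 8], [2, 4, 6]              # diagonals
].freeze

# Elevation of a state: lines containing the player's marks and no others.
def open_lines_for(board, player)
  WIN_LINES.count do |line|
    cells = line.map { |i| board[i] }
    cells.include?(player) && cells.all? { |c| c.nil? || c == player }
  end
end

# Every [move, resulting_board] pair reachable in one action.
def successors(board, player)
  board.each_index.select { |i| board[i].nil? }.map do |i|
    [i, board.dup.tap { |b| b[i] = player }]
  end
end

# Vanilla hill climbing: stop at the FIRST neighbor that is higher
# than the current state.
def simple_hill_climb(board, player)
  current = open_lines_for(board, player)
  found = successors(board, player).find do |_move, next_board|
    open_lines_for(next_board, player) > current
  end
  found && found.first
end

# Steepest ascent: score EVERY neighbor and take the highest one.
def steepest_ascent(board, player)
  best = successors(board, player).max_by do |_move, next_board|
    open_lines_for(next_board, player)
  end
  best && best.first
end

empty = Array.new(9)
p simple_hill_climb(empty, "X")  # prints 0 (a corner: better, but not best)
p steepest_ascent(empty, "X")    # prints 4 (the middle)
```

Vanilla hill climbing settles for the first corner because it's an improvement over the empty board, while steepest ascent checks all nine cells and finds the middle.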

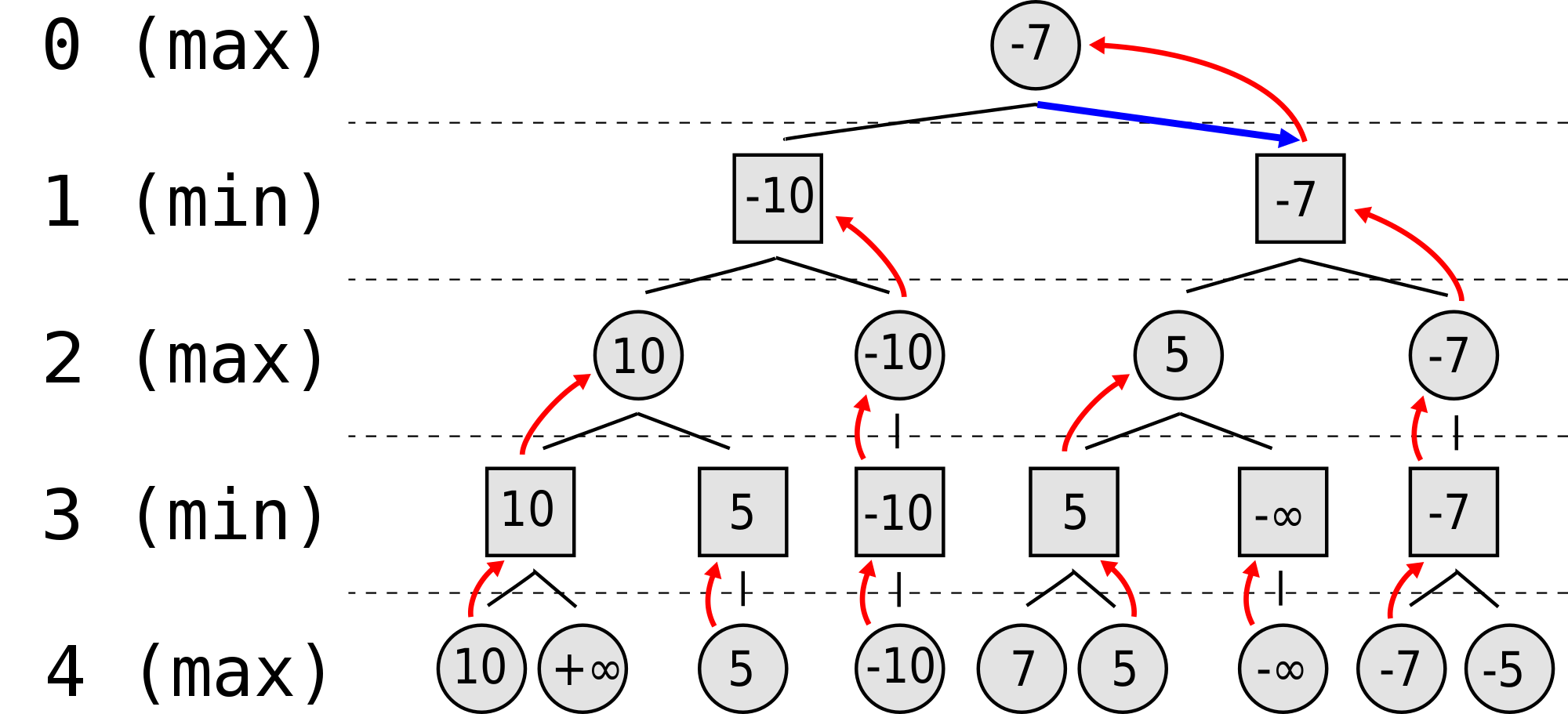

Minimaxing is a method more specific to game theory. You assume that, for every move each player makes, they are going to try to maximize their own gains and minimize their own losses - while maximizing the losses and minimizing the gains for the opposing player. In true minimaxing, you estimate the number of moves it will take for you to win the game from a given state, and use that to assign the state a score. I combined this approach with a different heuristic to make my program as fast and effective as I could.
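For reference, here's what a plain, full-depth minimax looks like in Ruby. This is a textbook-style sketch, not what my program does: it searches all the way to the end of the game and scores finished boards as +1 (X wins), -1 (O wins), or 0 (tie) instead of counting moves. The names `winner`, `other`, `minimax`, and `best_move` are assumptions for the example.

```ruby
WIN_LINES = [
  [0, 1, 2], [3, 4, 5], [6, 7, 8],  # rows
  [0, 3, 6], [1, 4, 7], [2, 5, 8],  # columns
  [0, 4, 8], [2, 4, 6]              # diagonals
].freeze

# Returns "X" or "O" if someone has three in a row, otherwise nil.
def winner(board)
  WIN_LINES.each do |a, b, c|
    return board[a] if board[a] && board[a] == board[b] && board[a] == board[c]
  end
  nil
end

def other(player)
  player == "X" ? "O" : "X"
end

# Value of a board from X's point of view, assuming both sides play
# their best: X picks the maximum child score, O picks the minimum.
def minimax(board, to_move)
  case winner(board)
  when "X" then return 1
  when "O" then return -1
  end
  moves = board.each_index.select { |i| board[i].nil? }
  return 0 if moves.empty?  # board is full with no winner: a tie

  scores = moves.map do |i|
    child = board.dup
    child[i] = to_move
    minimax(child, other(to_move))
  end
  to_move == "X" ? scores.max : scores.min
end

# Pick the move whose resulting board has the best minimax value.
def best_move(board, player)
  moves = board.each_index.select { |i| board[i].nil? }
  scored = moves.map do |i|
    child = board.dup
    child[i] = player
    [i, minimax(child, other(player))]
  end
  pick = player == "X" ? scored.max_by(&:last) : scored.min_by(&:last)
  pick && pick.first
end

board = ["X", "O", "X",
         nil, "O", nil,
         nil, nil, nil]
p best_move(board, "X")  # prints 7 (X has to block O's column)
```

Searching every line of play to the end is affordable for Tic-Tac-Toe, but it's the slowest option, which is why pairing a shallow lookahead with a heuristic is tempting.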

I actually built the first two iterations of this program back in mid-October, but I was then hit in quick succession with the Girl Scout National Convention and GSLI conference, my Gold Award Project, make-up work, then school, and it's just been crazy ever since.

The first draft took about a week to build. I coded everything in Ruby.

Basically, the computer takes the Tic-Tac-Toe board, uses it to test all the possible moves it can make, and picks the one that gets it the highest score.

It played Tic Tac Toe, for sure! It just... didn't play very WELL. I used a heuristic that scored the board on the number of open rows, columns, and diagonals, but it just didn't give the weight needed to winning states. So I tried again.

I found a promising-looking heuristic here. This one made use of an array to store all the possible ways to win, and another to score the state based on how many ways X and O can win on the board. But even better, I gave the computer the ability to look one move further ahead, and try to guess how the opponent would respond to its move. This was minimaxing - the computer assumed the opposing player would play their best, and could then use its move to put the opponent in the worst possible position.
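Roughly, the idea looks like the Ruby sketch below. To be clear, this is a reconstruction under assumptions, not the heuristic I linked to or my actual code: the scoring rule (lines still open for me minus lines still open for the opponent, with an assumed `WIN_SCORE` of 100 for a finished line) and the names `evaluate` and `choose_move` are made up for illustration.

```ruby
# All the possible ways to win, stored in one array.
WIN_LINES = [
  [0, 1, 2], [3, 4, 5], [6, 7, 8],  # rows
  [0, 3, 6], [1, 4, 7], [2, 5, 8],  # columns
  [0, 4, 8], [2, 4, 6]              # diagonals
].freeze
WIN_SCORE = 100  # assumed weight so a finished line outweighs everything else

def other(player)
  player == "X" ? "O" : "X"
end

def empty_cells(board)
  board.each_index.select { |i| board[i].nil? }
end

def place(board, index, player)
  board.dup.tap { |b| b[index] = player }
end

def won?(board, player)
  WIN_LINES.any? { |line| line.all? { |i| board[i] == player } }
end

# Score a board for `player`: +1 for each line the opponent hasn't
# touched, -1 for each line the player hasn't touched, and a big
# bonus or penalty for a completed line.
def evaluate(board, player)
  opponent = other(player)
  WIN_LINES.sum do |line|
    mine   = line.count { |i| board[i] == player }
    theirs = line.count { |i| board[i] == opponent }
    if mine == 3 then WIN_SCORE
    elsif theirs == 3 then -WIN_SCORE
    else (theirs.zero? ? 1 : 0) - (mine.zero? ? 1 : 0)
    end
  end
end

# Two-move lookahead: for each of my moves, assume the opponent picks
# the reply that is worst for me, then choose the move whose worst
# case is the best.
def choose_move(board, player)
  opponent = other(player)
  empty_cells(board).max_by do |move|
    after_mine = place(board, move, player)
    replies = empty_cells(after_mine)
    if won?(after_mine, player) || replies.empty?
      evaluate(after_mine, player)
    else
      replies.map { |r| evaluate(place(after_mine, r, opponent), player) }.min
    end
  end
end

threatened = ["O", "O", nil,
              nil, "X", nil,
              nil, nil, nil]
p choose_move(threatened, "X")  # prints 2 (block the top row)
```

Because the win weight is so much larger than the per-line points, a move that lets the opponent finish a line always scores worse than one that doesn't.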

This program worked GREAT! It was almost unbeatable!

But, it had one weakness.

It could only look two moves ahead, and beating THIS trap required the program to look ahead 4.

In the end, I found it was easier to adjust my heuristic than to double up on the moves my program watched. I made sure it treated getting these traps - the three corners, or this triangle - as winning when scoring the boards, but NOT when checking for a win. In this way, the program finally worked.

Next, I'm working on trying to make a program that can learn the game on its own from repeated trials - but that's a whole other ball game. For now, I'm just happy with how my first foray into AI programming worked out!

If you have questions or comments, don't hesitate to ask! I'm always happy for feedback.
